What is a Technical SEO service and why do you need it for your website?

Technical SEO is the practice of improving your website so that search engines can read and understand it better.

Good technical SEO will make your site faster, easier to navigate, and more efficient.

This way, visitors will have a better experience.

A well-optimized site can also lead to more visitors and potential customers.

Technical SEO services are all about making your website better for search engines.

They help your site show up higher in search results.

A better ranking brings more organic traffic to your website.

Regular checks help find and fix problems like duplicate content and slow page speeds.

Investing in technical SEO services is important for anyone who wants to boost their website’s performance.

Technical SEO can improve your search rankings.

It improves site speed, crawlability, and indexation.

This helps search engines reach your content easily and understand it better.

Fixing broken links and improving code with the help of a technical SEO consultant in Dublin makes things work better for your website and for users.

Using proper schema markup helps search engines read content.

This often leads to rich snippets that can increase click rates.

Fixing technical errors stops penalties that can hurt rankings.

A mobile-friendly site meets the needs of the growing number of mobile users and keeps its visibility in search results.

A strong technical SEO base helps apply other SEO methods.

Google and Bing reward well-optimized websites in their rankings.

If you apply good technical SEO practices, your site will perform better in search results, especially with guidance from an SEO consultant.

How Do We Conduct a Technical SEO Audit? What SEO Tools Are We Using?

We use tools like Screaming Frog, Ahrefs, and Semrush.

These tools check site speed, crawlability, and indexation.

They find important problems that can hurt website performance.

The results help us make a plan to improve your search engine visibility.

A complete analysis looks at many parts. It checks the site layout, URL setup, and internal linking.

It also looks for broken links, duplicate content, and whether the site works well on mobile devices.

The insights from the audit help improve the website.

This can lead to higher search engine rankings and more visitors.

Our Technical SEO Services for Your Website

Screaming Frog Audit

Screaming Frog is an SEO tool that crawls websites to find technical issues and areas for improvement.

It reports on important SEO elements such as broken links, duplicate content, and missing metadata.

This information is useful for making websites better and easier to find.

It looks at page titles, headings, and image alt text.

It finds problems like slow-loading pages and too many redirects.

This allows users to make specific changes that can improve both user experience and search rankings.

Screaming Frog looks at URLs to find crawl errors and parts that need improvement.

This detailed method helps fix immediate SEO problems and keeps the website healthy in the long run.

Ahrefs Technical Audit

A technical SEO audit using Ahrefs includes checking the site, analyzing performance, and looking at the website’s structure.

Start by running a site crawl.

This helps you find broken links, duplicate content, and indexing problems.

Check how well the site is performing.

Pay attention to its site speed and how well it works on mobile devices.

Check for the right schema markup and how structured data is used.

Check how easy it is for users and crawlers to move around the website.

This approach fixes today’s technical problems. It also lays the groundwork for future SEO work.

Ahrefs’ tools help find important problems blocking site performance.

The process also checks how long pages take to load.

This makes sure visitors have a smooth experience.

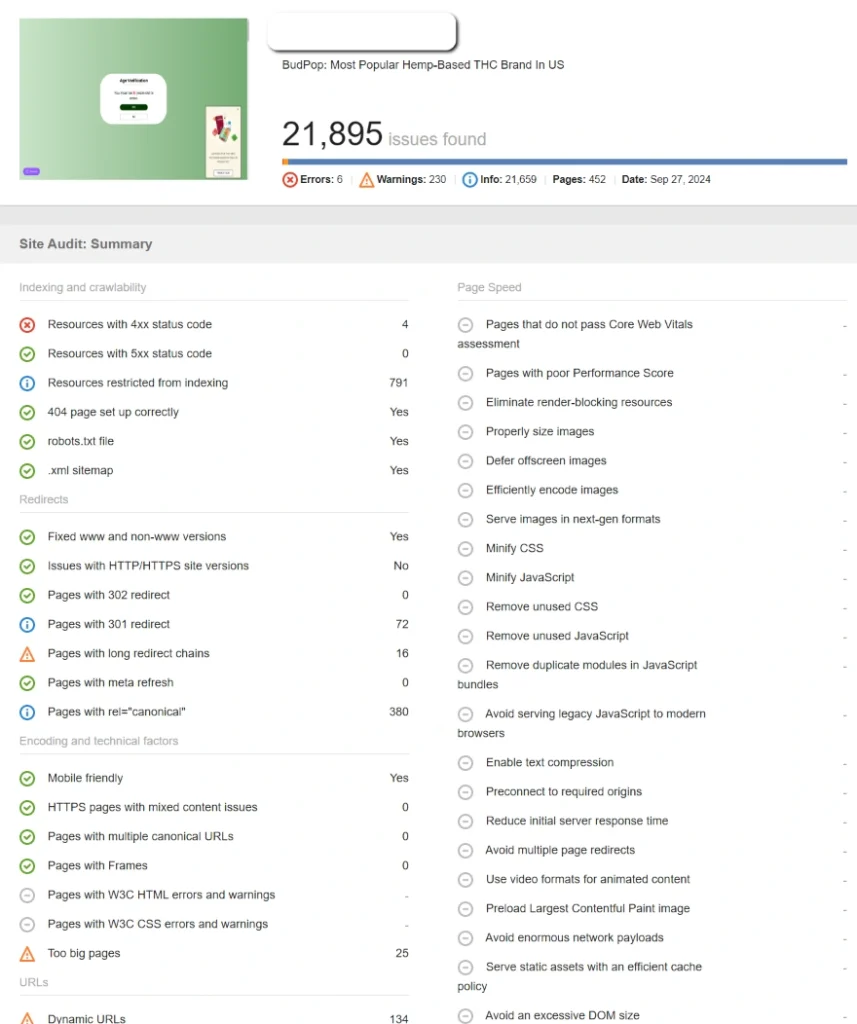

Semrush Technical Audit

Semrush’s site audit tool finds important issues that hurt website performance and search rankings.

It checks crawlability, indexation, page speed, and how well sites work on mobile.

The audit shows broken links, duplicate content, and slow-loading pages.

Prioritize these problems by how much they affect user experience and SEO results.

Fix these errors to improve how the site works and help search engines understand your content more clearly.

Use Semrush to check and improve schema markup.

This helps you show up better in search results with rich snippets.

Good schema markup can raise your click-through rates.

A complete technical SEO audit makes sure your website follows search engine rules.

This helps boost rankings, attract more traffic, and increase conversions.

It helps you find and fix technical problems that may hurt your site’s visibility and performance.

What Are The Main Website Technical Issues?

Slow website speed, problems with mobile optimization, crawl errors, broken links, and poor XML sitemaps are the main technical issues hurting websites.

Crawl errors stop search engines from correctly indexing the content on a website.

Broken links upset users and waste the crawl budget.

Ineffective XML sitemaps make it hard for search engines to understand how a site is set up.

Fixing these problems makes it easier for users and helps search engines find content.

This helps boost rankings and increase organic traffic.

Google and other search engines prefer websites that are well-built.

Site Speed and Performance

Site speed greatly affects how users feel about a website and how it ranks in search engines.

A slow website annoys visitors, which leads to more people leaving the site quickly.

Google uses page speed as a ranking factor, so fast-loading sites have an advantage in search results.

Making sites faster means working on image sizes, server response times, and how well the code runs.

Browser caching and content delivery networks (CDNs) can improve load times.

Fast sites keep users happier and can lead to more sales.

Technical SEO practices that focus on speed are very important for a good online presence.

Website owners should make speed optimization a top priority to do well in today’s busy online world.

Mobile Optimization

Google primarily crawls and indexes the mobile version of a site, so mobile-friendly sites tend to do better in search results.

Mobile optimization means making designs that work well on different screen sizes.

This helps to provide a smooth experience for users on all devices.

Responsive design lets text, images, and navigation adapt to each screen size.

Optimizing images and reducing code makes pages load faster on unstable mobile networks.

A site that is easy to use leaves a better impression on visitors and shows up better in search engines.

Adapting to different screen sizes is key for a good online presence in today’s mobile-focused world.

Crawl errors and indexing issues

Crawl errors and indexing issues are problems that stop search engines from accessing and organizing website content correctly.

These problems can greatly affect how visible a site is in search results.

Server errors (5xx status codes) happen when the server fails to answer a request.

Soft 404s show up when a page loads successfully but has little or no real content.

Solutions involve improving website structure, increasing site speed, and setting up robots.txt files correctly.

Fixing these issues helps enhance search engine rankings and makes the user experience better.

Google Search Console reports crawl errors and indexing problems so that you can fix them.

Fixing them makes sure that search engines like Google can read and list a website.

Broken links and 404 errors

Broken links and 404 errors hurt how users feel about a website and how it ranks in search engines.

This leads to more people leaving the site quickly and fewer chances to make sales.

These issues also stop search engines from crawling sites well and lower their overall visibility.

Checking for and fixing broken links regularly keeps content working well. It helps users feel happy and makes search engines crawl better.

Fixing these errors can increase click-through rates.

Fixing 404 errors and replacing broken links with our website maintenance service shows search engines that a website is well-cared for and reliable.

In Dublin’s busy digital market, fixing these technical SEO problems is key for improving online presence and creating better user experiences.
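As a rough illustration of how a broken-link check works, here is a minimal Python sketch. It collects the links on a page and flags any that return a status code of 400 or above. The `find_broken_links` function, the `get_status` callback, and the sample status table are all made up for this example; a real audit tool would send HTTP requests instead of using a lookup table.

```python
from html.parser import HTMLParser
from typing import Callable, Dict, List


class LinkCollector(HTMLParser):
    """Collects the href value of every <a> tag in an HTML document."""

    def __init__(self) -> None:
        super().__init__()
        self.links: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def find_broken_links(html: str, get_status: Callable[[str], int]) -> Dict[str, int]:
    """Return {url: status} for every link whose status code is 400 or above."""
    collector = LinkCollector()
    collector.feed(html)
    broken = {}
    for url in collector.links:
        status = get_status(url)
        if status >= 400:
            broken[url] = status
    return broken


# Hypothetical status table standing in for real HTTP requests.
fake_statuses = {"/services/": 200, "/old-page/": 404}
page = '<a href="/services/">Services</a> <a href="/old-page/">Old page</a>'
print(find_broken_links(page, lambda url: fake_statuses.get(url, 404)))
# prints {'/old-page/': 404}
```

Passing the status lookup in as a function keeps the example runnable offline and makes it easy to swap in a real HTTP client later.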

XML Sitemap

An XML sitemap helps search engines understand the site’s layout.

It shows the key pages, images, and other files of a website.

It helps search engines find and read content better.

Creating an XML sitemap means looking at the website’s structure.

You need to include all useful URLs.

This process helps arrange content in a clear way.

Submitting the sitemap to search engines like Google makes sure they have the latest information about the site’s layout.

This can lead to more frequent crawling and indexing. It may also lead to better search rankings.

XML sitemaps should be updated regularly.

Keeping the sitemap free of errors makes it work better.

A good XML sitemap is important for improving online visibility and boosting organic traffic.

It’s an important part of technical SEO strategies.

It helps search engines like Google understand and list a website better.
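To show what an XML sitemap looks like in practice, here is a small Python sketch that builds one from a list of pages. The `build_sitemap` function name and the example URLs and dates are assumptions made for this illustration; the `urlset`, `url`, `loc`, and `lastmod` elements come from the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET
from typing import Iterable, Tuple

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: Iterable[Tuple[str, str]]) -> str:
    """Build sitemap XML from (url, last_modified_date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages; dates use the YYYY-MM-DD format.
sitemap = build_sitemap([
    ("https://example.com/", "2024-01-15"),
    ("https://example.com/services/", "2024-02-01"),
])
print(sitemap)
```

The output can be saved as `sitemap.xml` and submitted through Google Search Console.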

Robots.txt

The robots.txt file is important for SEO because it helps search engines know what content to crawl.

A well-managed robots.txt file can help you control your online presence.

It tells search engines which web pages to check and which ones to skip.

Managing robots.txt well prevents common technical problems.

Blocking important pages by mistake can hurt visibility in search results.

Regular audits of this file are needed to avoid problems and stay in line with your SEO plan.

A well-made robots.txt file helps make a website work better.

This leads to enhanced search rankings and more organic traffic.
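One simple way to audit a robots.txt file is to test a list of important URLs against its rules. The sketch below uses Python's standard `urllib.robotparser` module. The sample rules, the URLs, and the `blocked_urls` helper are made up for this example.

```python
from typing import List
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/
"""


def blocked_urls(robots_txt: str, urls: List[str], user_agent: str = "Googlebot") -> List[str]:
    """Return the URLs that the given robots.txt rules block for a crawler."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


important_pages = [
    "https://example.com/services/",
    "https://example.com/admin/login",
]
print(blocked_urls(ROBOTS_TXT, important_pages))
# prints ['https://example.com/admin/login']
```

If a page you want in search results shows up in the blocked list, the rule that blocks it needs to be changed.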

Structured data/schema markup

Structured data helps search engines understand what the page and site are about.

When search engines find structured data, they can display rich snippets.

Rich snippets provide more details in search results, which can attract more clicks and lead to more traffic.

Using structured data also helps websites stand out and perform better in search engine results.

Schema markup points out specific details like products, reviews, and events.

It can make pages eligible for rich snippets in search results, which can increase click-through rates.

Structured data helps people find information in voice searches and featured snippets.

This brings relevant visitors to websites. It’s important to set it up correctly to avoid missing these opportunities.
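Schema markup is usually added to a page as a JSON-LD script tag. The Python sketch below builds one for a product with a review rating. The `product_json_ld` function and the sample product are assumptions for this example; `Product` and `AggregateRating` are types from the schema.org vocabulary.

```python
import json


def product_json_ld(name: str, description: str, rating: float, review_count: int) -> str:
    """Return a JSON-LD script tag describing a product and its rating."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "description": description,
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating,
            "reviewCount": review_count,
        },
    }
    # Escape "<" so a value can never close the script tag early.
    payload = json.dumps(data, indent=2).replace("<", "\\u003c")
    return '<script type="application/ld+json">\n' + payload + "\n</script>"


# Hypothetical product used only for illustration.
snippet = product_json_ld("Trail Running Shoe", "Lightweight shoe for off-road runs.", 4.6, 128)
print(snippet)
```

The resulting tag goes in the page's HTML, and the markup can then be checked with Google's Rich Results Test.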

Site architecture

Site architecture is the way a website’s content and pages are organized.

A well-organized site helps visitors move around easily and find information quickly.

It also helps search engines to read and list pages easily and boosts their visibility in search results.

Bad URL structure can confuse both users and search engines.

Fixing problems like duplicate content and broken links is important for keeping the site trustworthy and high in search rankings.

Internal Linking

Good internal linking helps improve search rankings and shows users and search engines where to find important content.

It aids search engines in understanding the layout of the site and how the pages relate to each other.

Links pointing to a page show search engines that its content is important.

The link text gives users and search engines information about the content of the linked page.

A strong internal linking plan helps a website be more visible and easy to use.

Internal linking is an important part of technical SEO that you should not ignore.
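One way to review internal linking is to count how many internal links point to each page and look for pages with none. The Python sketch below does this for a small link graph. The graph, the page paths, and the function names are made up for this example; in a real audit the graph would come from a crawl.

```python
from collections import Counter
from typing import Dict, List


def inbound_link_counts(link_graph: Dict[str, List[str]]) -> Counter:
    """Count how many other pages link to each page."""
    counts = Counter({page: 0 for page in link_graph})
    for source, targets in link_graph.items():
        for target in set(targets):  # count each source page once per target
            if target != source:  # ignore links from a page to itself
                counts[target] += 1
    return counts


def orphan_pages(link_graph: Dict[str, List[str]]) -> List[str]:
    """Return pages that no other page links to."""
    counts = inbound_link_counts(link_graph)
    return sorted(page for page, total in counts.items() if total == 0)


# Hypothetical site: each key is a page, each value lists the pages it links to.
site = {
    "/": ["/services/", "/blog/"],
    "/services/": ["/"],
    "/blog/": ["/", "/services/"],
    "/old-landing/": ["/"],
}
print(orphan_pages(site))
# prints ['/old-landing/']
```

Pages with few or no inbound links are harder for users and crawlers to find, so they are good candidates for new internal links.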

Duplicate content

Search engines find it hard to pick the best version when the same content shows up on several pages.

This leads to lower rankings and divided traffic.

It also hurts the trust and authority of the site.

Consolidating duplicates, for example with canonical tags or redirects, directs ranking signals to one page and makes sure users find useful content.

Regular audits find problems with duplicate content.

These problems often arise from product descriptions, different URLs, or text that has been copied.

Google does not usually penalize duplicate content as such, but it may filter duplicate pages out of results, and content copied to manipulate rankings can lead to penalties.

Webmasters need to fix this to enhance their search results and user experience.
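A basic duplicate content check can group pages whose text is identical after cleaning up spacing and letter case. The Python sketch below shows the idea. The `find_duplicates` function and the sample pages are assumptions for this example, and it only catches exact copies; audit tools also look for near-duplicates.

```python
import hashlib
import re
from collections import defaultdict
from typing import Dict, List


def find_duplicates(pages: Dict[str, str]) -> List[List[str]]:
    """Group URLs whose text is identical after normalizing whitespace and case."""
    groups = defaultdict(list)
    for url, text in pages.items():
        normalized = re.sub(r"\s+", " ", text).strip().lower()
        digest = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
        groups[digest].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


# Hypothetical page texts keyed by URL.
pages = {
    "/shoes/red": "Lightweight running shoe.  Free delivery.",
    "/shoes/red?ref=ad": "lightweight running shoe. free delivery.\n",
    "/shoes/blue": "Waterproof hiking boot.",
}
print(find_duplicates(pages))
# prints [['/shoes/red', '/shoes/red?ref=ad']]
```

Each group in the output is a set of URLs that would normally be consolidated into one preferred page.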

Website Breadcrumbs

Website breadcrumbs are small text links that show where you are on a site and the path back to higher-level pages.

They help users understand where they are and how to get back to previous pages.

They can improve the user experience and help visitors find what they need quickly.

They are important for two main reasons:

- User Experience: Breadcrumbs help visitors find their way around your site. They can easily go back to earlier pages or higher categories. This simple way of moving around keeps users interested and lowers bounce rates.

- SEO Benefits: Search engines use breadcrumbs to see how your site is organized. This makes it easier for them to read your site and can improve your rankings. Breadcrumbs often show up in search results, which can lead to more clicks.

Good breadcrumbs can lead to higher rankings, more traffic, and better results for your website.

Google and other search engines like clear site structures.
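Breadcrumbs can also be described to search engines with structured data. The Python sketch below builds `BreadcrumbList` markup from a trail of page names and URLs. The `breadcrumb_json_ld` function and the sample trail are made up for this example; `BreadcrumbList` and `ListItem` are schema.org types.

```python
import json
from typing import List, Tuple


def breadcrumb_json_ld(trail: List[Tuple[str, str]]) -> str:
    """Build BreadcrumbList JSON-LD from (name, url) pairs, ordered from the home page down."""
    data = {
        "@context": "https://schema.org",
        "@type": "BreadcrumbList",
        "itemListElement": [
            {"@type": "ListItem", "position": position, "name": name, "item": url}
            for position, (name, url) in enumerate(trail, start=1)
        ],
    }
    return json.dumps(data, indent=2)


# Hypothetical breadcrumb trail: Home > Services > Technical SEO.
markup = breadcrumb_json_ld([
    ("Home", "https://example.com/"),
    ("Services", "https://example.com/services/"),
    ("Technical SEO", "https://example.com/services/technical-seo/"),
])
print(markup)
```

The JSON output is placed inside a `<script type="application/ld+json">` tag on the page that the trail belongs to.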

HTTPS and SSL

HTTPS stands for Hypertext Transfer Protocol Secure.

HTTPS and SSL are security tools that keep data safe between users and websites.

SSL, or Secure Sockets Layer, is a protocol that encrypts data sent over the internet. Modern sites use its successor, TLS.

An SSL certificate shows that a site can provide a secure connection.

HTTPS and SSL are important because they help to keep personal information private.

A secure website helps people feel safer when sharing information online.

Search engines such as Google favour secure websites in search results, which is why HTTPS matters for SEO.

This helps to build trust and may lead to higher sales. Users like to interact with sites they feel are safe.

HTTPS and SSL also prevent technical problems, such as browser security warnings, that might confuse visitors and search engine crawlers.
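A common technical problem on HTTPS sites is a page that still loads images or scripts over plain HTTP, often called mixed content. The Python sketch below scans a page's HTML for such resources. The `find_mixed_content` function, the list of tags it checks, and the sample HTML are assumptions for this example, not a complete audit.

```python
from html.parser import HTMLParser
from typing import List


class MixedContentFinder(HTMLParser):
    """Finds resources that a page loads over plain HTTP."""

    # Tags that load resources, and the attribute that holds the URL.
    RESOURCE_ATTRS = {"img": "src", "script": "src", "iframe": "src", "link": "href"}

    def __init__(self) -> None:
        super().__init__()
        self.insecure: List[str] = []

    def handle_starttag(self, tag, attrs):
        wanted = self.RESOURCE_ATTRS.get(tag)
        for name, value in attrs:
            if name == wanted and value and value.lower().startswith("http://"):
                self.insecure.append(value)


def find_mixed_content(html: str) -> List[str]:
    """Return the insecure resource URLs found in the HTML."""
    finder = MixedContentFinder()
    finder.feed(html)
    return finder.insecure


# Hypothetical page markup for illustration.
sample_html = (
    '<img src="http://example.com/logo.png">'
    '<script src="https://example.com/app.js"></script>'
    '<a href="http://example.com/about/">About</a>'
)
print(find_mixed_content(sample_html))
# prints ['http://example.com/logo.png']
```

Ordinary links to HTTP pages are ignored here because they do not load anything into the secure page.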

People Also Asked

What is technical SEO vs on-page SEO?

Technical SEO looks at the backend parts of a website that affect crawling and indexing.

On-page SEO, on the other hand, improves content and HTML to make it relevant and engaging.

Technical SEO helps search engines get to a website and know what it is about.

It works on making the site faster, fixing broken links, and adding structured data.

This work improves how a site works for search engines.

On-page SEO focuses on the content and source code of each page.

It involves improving title tags, meta descriptions, and how keywords are used.

These things make the user experience better and add value to the content.

Both types of SEO are important for being seen and doing well.

Technical SEO builds a strong base.

On-page SEO improves the content that users read.

Together, they form a complete approach to search engine optimization.

Google and Bing use both technical and on-page factors to decide how to rank websites.

Webmasters need to balance these parts to get the best results in search engine rankings.

What is the cost of a technical SEO audit in Dublin and Ireland?

A technical SEO audit in Ireland usually costs between €500 and €5,000.

The price can change depending on how complex the website is and how detailed the audit is.

A deep review finds important problems that hurt site performance.

These problems include slow loading times, broken links, and poor mobile usability, all of which can be addressed by a technical SEO consultant.

Fixing these problems makes the user experience better and helps the site rank higher in search engines.

This leads to more organic traffic and possible conversions.

Choosing the right provider is very important.

Quality audits look at all the key technical parts.

They give useful insights for clear improvements.

Investing in a technical SEO audit protects your business’s future online.

Knowing the costs and value lets you make good choices.

This helps boost your online presence in a busy digital world.

What does a technical SEO audit include?

The audit finds broken links and checks for duplicate content.

It looks at code quality, reviews schema markup, tests how mobile-friendly the site is, and analyzes the website’s structure.

Site speed is very important for how users feel about their experience.

When pages load slowly, more people leave the site quickly.

Crawlability and indexation help search engines get to and organize content in a good way.

Broken links, duplicate content, and poor coding can hurt a site’s performance.

Fixing these issues makes it easier for search engines to find the site and helps users navigate it better, ultimately improving local SEO.

Schema markup and structured data help search engines understand content more clearly.

This can result in rich snippets and higher click-through rates.

Mobile responsiveness is important since many users visit websites on mobile devices.

A mobile-friendly site is now essential.

Website structure should help users and crawlers move around easily.

A detailed technical SEO audit focuses on important areas to improve search rankings and increase conversion rates.

How long does a technical SEO audit take?

A technical SEO audit usually takes a few days to two weeks.

How long it takes depends on the size and complexity of the website.

Small websites may take just a few days for a full check.

Bigger, more detailed sites might need up to two weeks for a careful review.

During the audit, experts look at site speed, crawlability, and design.

They find problems like broken links, duplicate content, and poor coding.

A good audit gives useful information to improve online presence.

It paves the way for better visibility and higher success rates.

The audit’s detail makes sure that no problems are overlooked.

This method improves the website for search engines and users.

Google, Bing, and other search engines like websites that are well-optimized.

How often should you do a technical SEO audit?

Conduct a technical SEO audit at least twice a year.

Audit more often if your website goes through big changes.

Regular audits can help find and fix issues.

These issues include broken links, slow loading times, and bad mobile responsiveness.

Such problems can hurt user experience and search rankings.

Proactive audits help keep your site ready for search engines.

They make sure the content is easy to crawl and get indexed.

Search engine algorithms change frequently.

Regular audits help you make updates like schema markup or improvements to structured data.

These changes can increase your visibility in search results.

Google and other big search engines care about websites that are in good shape.

This makes it important to check your technical SEO often to keep your site performing well.