In the intricate world of technical SEO, the humble robots.txt file is a foundational pillar of site management and search engine communication. Far more than a simple technicality, it gives search engine crawlers critical directions that shape crawl efficiency, influence indexation patterns, and determine how your site’s crawl budget is spent. A carefully configured robots.txt file lets webmasters and SEO professionals guide bots toward high-value content and away from private or low-value directories, improving a website’s overall search performance. Misunderstanding its function, however, can lead to unintended consequences, such as accidentally blocking vital resources or letting crawlers waste time on duplicate content. This guide explains the strategic implementation of robots.txt: its syntax, its substantial benefits for SEO, and established best practices. By mastering this essential tool, you gain precise control over how search engines crawl your site, helping them focus on your most important pages. A well-crafted robots.txt file is not strictly required, but it is a practical necessity for any website seeking to optimize its visibility, strengthen its technical health, and compete in organic search.

Key Takeaways

- A robots.txt file is not strictly required, but your website should have one. It helps search engines crawl your site more efficiently.

- This file tells crawlers which pages they may visit. Blocking duplicate or low-value URLs keeps crawlers from wasting time on them, which supports your site’s SEO.

- The robots.txt file also keeps compliant crawlers out of private areas, such as admin folders, and helps them reach your most important content first. It is not a security tool, though: the file is public, and bad bots can ignore it.

- Update your robots.txt file whenever your site structure or SEO strategy changes. Regular reviews keep its rules accurate and your site performing well.

- By using robots.txt, you can shape how search engines crawl your website. This will make your online presence stronger and easier to find.

What Is Robots.txt?

Robots.txt is a text file that tells search engine bots which pages to visit on your website.

This small file is a way for your site to talk to search engines.

It lets them know what content they can look at.

By marking certain pages or sections as off-limits, you control which parts of your site search engines crawl.

A good robots.txt file helps search engines crawl better.

Search engines have a huge number of pages to crawl and only limited resources for each site.

By guiding them to the most important parts of your site, you make sure they use their time well.

Understanding what robots.txt does is important for boosting your website’s visibility.

A well-configured file helps search engines explore your site efficiently.
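To make this concrete, here is a minimal sketch using Python’s standard-library `urllib.robotparser`, which reads robots.txt rules the way a simple compliant crawler would. The domain `example.com` and the `/private/` folder are placeholders chosen for illustration. This parser follows the original robots.txt conventions (prefix matching, first matching rule wins), so it does not reproduce every detail of how Google interprets the file.

```python
from urllib.robotparser import RobotFileParser

# A minimal robots.txt: every crawler ("*") may visit the site
# except anything under the hypothetical /private/ folder.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for url in ("https://example.com/blog/seo-tips",
            "https://example.com/private/drafts.html"):
    allowed = parser.can_fetch("*", url)
    print(f"{url} -> {'crawl' if allowed else 'skip'}")
```

Running it prints `crawl` for the blog post and `skip` for the draft, because only the second URL starts with the disallowed path.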

How Robots.txt Works

Robots.txt acts as a guide for search engine bots.

It tells them which parts of a website they may crawl.

This plain text file must sit in the root directory of your website, for example https://yourdomain.com/robots.txt.

Compliant bots request this file before crawling the rest of your site.

It helps them know which sections they can visit.

If you want to stop bots from looking at private folders, you can list them in your robots.txt file.

You can do this by using the “Disallow” command.
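Because crawlers only look for the file at the root, you can work out which robots.txt governs any page from its scheme and host alone. The sketch below uses a hypothetical helper named `robots_url`; it also reflects that each protocol, host, and port combination has its own robots.txt, so a subdomain such as `blog.example.com` is not covered by `example.com/robots.txt`.

```python
from urllib.parse import urlsplit, urlunsplit

def robots_url(page_url: str) -> str:
    """Return the robots.txt URL that governs page_url (hypothetical helper).

    Crawlers only request robots.txt from the root of a host, so the
    page's path, query string, and fragment are discarded.
    """
    parts = urlsplit(page_url)
    # netloc is the host plus any explicit port, e.g. "example.com:8080"
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))

print(robots_url("https://example.com/private/report.pdf?v=2"))
print(robots_url("https://blog.example.com/post/1"))
```

The first call yields `https://example.com/robots.txt` and the second `https://blog.example.com/robots.txt`, which is why every subdomain needs its own file.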

It’s important to review and update this file often to align with your SEO plan.

By controlling how search engines crawl your site, you are taking steps to improve your online presence.

Knowing how to read and use a robots.txt file is a vital part of your SEO work.

Benefits of Using Robots.txt

Using a robots.txt file helps you manage your online presence by showing search engines which parts of your site they should or should not check.

By following good technical SEO practices with robots.txt, you can boost your site’s performance in search engine results.

This way, you can keep crawlers from spending time on duplicate content or less useful pages, which can weaken your site’s overall authority.

A properly set up robots.txt file helps you make the best use of your crawl budget.

Search engines allocate a limited amount of resources to crawling each site.

By guiding bots to your key pages, you help ensure those pages are crawled first.

This can lead to faster indexing and better visibility.
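As a rough illustration of how this saves crawl budget, the sketch below filters a list of discovered URLs through a robots.txt file before they reach a crawl queue. The disallowed paths (`/search` for internal search results and `/cart/`) and the URLs are hypothetical, and real search engines schedule crawling in far more sophisticated ways.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: keep crawlers out of internal search results
# and the shopping cart, which add no value to the index.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Disallow: /cart/
"""

discovered = [
    "https://example.com/products/blue-widget",
    "https://example.com/search?q=widget",
    "https://example.com/search?q=widget&page=2",
    "https://example.com/cart/checkout",
    "https://example.com/guides/widget-care",
]

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Only URLs the rules allow are queued; the rest never consume crawl budget.
crawl_queue = [url for url in discovered if parser.can_fetch("*", url)]
skipped = [url for url in discovered if url not in crawl_queue]

print("Queued:", crawl_queue)
print("Skipped:", skipped)
```

Note that rules are prefix matches: `Disallow: /search` would also block a page such as `/searchable-guide`, so choose paths carefully.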

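Robots.txt can also help keep sensitive folders, such as admin or staging areas, out of crawlers’ paths.

However, it only asks compliant bots to stay away; it does not block access.

The file is public and malicious bots can ignore it, so protect truly sensitive content with passwords or other access controls.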
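Keep in mind that robots.txt is served publicly, so anyone can read the paths it lists. The sketch below, which uses a hypothetical `disallowed_paths` helper and made-up folder names, pulls every `Disallow` value out of a file, exactly as a curious visitor or a misbehaving bot could. Treat the file as a crawling hint, not as a way to hide anything.

```python
# Hypothetical robots.txt; the folder names are illustrative only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /staging/
Disallow: /backups/   # comments after a rule are ignored
"""

def disallowed_paths(robots_txt: str) -> list:
    """Collect every non-empty Disallow value, as anyone reading the file could."""
    paths = []
    for line in robots_txt.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and whitespace
        field, sep, value = line.partition(":")
        if sep and field.strip().lower() == "disallow" and value.strip():
            paths.append(value.strip())
    return paths

print(disallowed_paths(ROBOTS_TXT))
```

The output lists `/admin/`, `/staging/`, and `/backups/`, which is why truly private areas need real access controls rather than a robots.txt entry alone.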

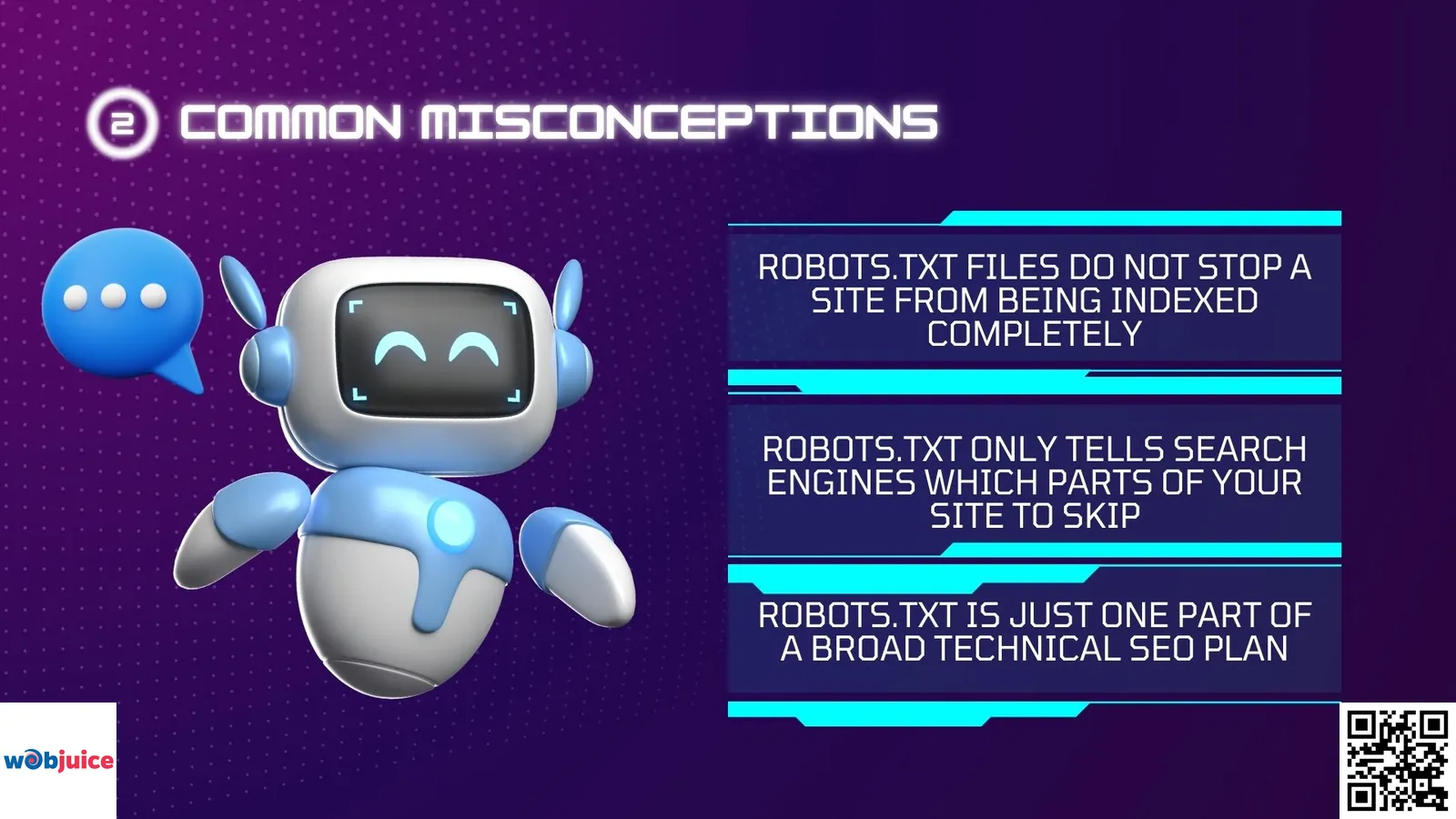

Common Misconceptions

A common misconception is that robots.txt keeps pages out of search results.

Many people believe this, but it is not true.

Robots.txt only tells search engines which parts of your site they should not crawl.

It controls crawling, not which pages show up in search results.

A disallowed URL can still be indexed if other pages link to it, so use a noindex directive when a page must stay out of search results.

Robots.txt is just one part of a broad technical SEO plan.

This plan has several factors that help a website perform well.

Best Practices for Implementation

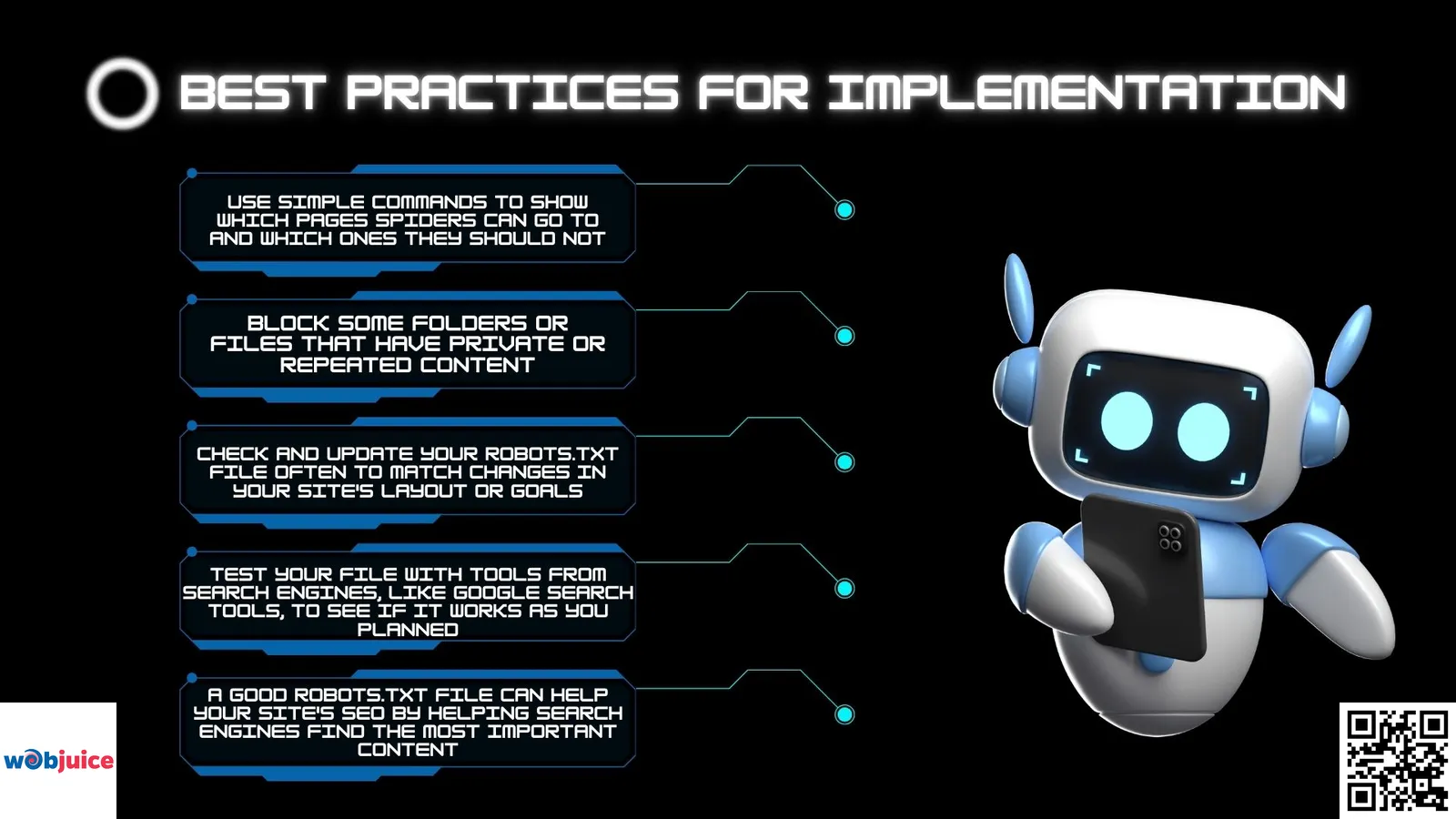

To use your robots.txt file well, keep its rules clear and precise.

Put your file in the root directory of your website.

Search engines only look for it there; a robots.txt file placed in a subfolder is ignored.

Use simple commands to show which pages spiders can go to and which ones they should not.

Block folders or files that contain private or duplicate content.

You should also use an XML sitemap and reference it in your robots.txt file.

A sitemap helps search engines see the full structure of your site.
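One common way to point crawlers at a sitemap is a `Sitemap:` line inside robots.txt; the directive is independent of any `User-agent` group and takes a full URL. The sketch below assumes Python 3.8 or later, where `RobotFileParser.site_maps()` returns the listed sitemap URLs; the sitemap address is a placeholder.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Disallow: /private/

# The Sitemap line is not tied to a User-agent group and needs an absolute URL.
Sitemap: https://example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

print(parser.site_maps())
```

This prints a one-item list containing the sitemap URL, while the `Disallow` rule above it keeps working as before.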

Keep your syntax correct.

Even small mistakes can cause problems.

Use comments to organize and explain your instructions.
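To see why small mistakes matter, the sketch below parses two versions of the same rule with Python’s `urllib.robotparser`: one correct and commented, one with a single wrong letter. Path values in robots.txt are case-sensitive, so the second version silently fails to block the folder. The `/checkout/` path and the `allowed` helper are hypothetical.

```python
from urllib.robotparser import RobotFileParser

def allowed(robots_txt: str, url: str) -> bool:
    """Parse robots_txt and report whether any crawler ("*") may fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch("*", url)

# Lines starting with "#" are comments and are ignored by parsers,
# so they are a safe place to explain why a rule exists.
CORRECT = """\
# Keep crawlers out of the checkout flow (no search value).
User-agent: *
Disallow: /checkout/
"""

# Same rule with one wrong letter: paths are case-sensitive,
# so /Checkout/ does NOT match /checkout/.
WRONG_CASE = """\
User-agent: *
Disallow: /Checkout/
"""

url = "https://example.com/checkout/payment"
print(allowed(CORRECT, url))     # False: blocked as intended
print(allowed(WRONG_CASE, url))  # True: the rule silently misses
```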

Check and update your robots.txt file often to match changes in your site’s layout or goals.

Test your file with tools from search engines, like Google Search Console, to see if it works as you planned.
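Before uploading a change, you can also run a quick local check that pins down which URLs must stay crawlable and which must be blocked. The sketch below uses a hypothetical `check_rules` helper and example URLs. Because `urllib.robotparser` uses prefix matching with the first matching rule winning, and does not implement Google’s `*` and `$` pattern matching, confirm the final result in Google Search Console as well.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /tmp/
"""

# URL -> whether it should be crawlable. These expectations are examples;
# list your own must-rank pages and must-block areas here.
EXPECTATIONS = {
    "https://example.com/": True,
    "https://example.com/products/widget": True,
    "https://example.com/admin/login": False,
    "https://example.com/tmp/export.csv": False,
}

def check_rules(robots_txt: str, expectations: dict, agent: str = "*") -> list:
    """Return (url, expected, actual) for every URL whose status is wrong."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    failures = []
    for url, expected in expectations.items():
        actual = parser.can_fetch(agent, url)
        if actual != expected:
            failures.append((url, expected, actual))
    return failures

problems = check_rules(ROBOTS_TXT, EXPECTATIONS)
print("All rules behave as expected" if not problems else problems)
```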

A good robots.txt file can help your site’s SEO by helping search engines find the most important content.

Following these practices helps search engines crawl your site efficiently.

Troubleshooting Robots.txt Issues

Use Google Search Console to find errors.

This tool points out problems like blocked paths or incorrect syntax.

If some pages are not showing in search results, check your robots.txt file carefully.

Incorrect user-agent targeting is another common issue.

Make sure each group of rules names the crawler it is meant for.

A rule meant only for Googlebot will also block Bing’s crawler if you place it under “User-agent: *” instead of “User-agent: Googlebot”.
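The sketch below shows how user-agent groups interact, using Python’s `urllib.robotparser` and hypothetical paths. A crawler follows only the group that matches it most specifically, so Googlebot here ignores the `User-agent: *` group entirely, including its `/admin/` rule. Google documents the same behavior for its crawlers, so if a shared rule should apply to Googlebot too, repeat it inside the Googlebot group.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /experiments/

User-agent: *
Disallow: /admin/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

checks = [
    ("Googlebot", "https://example.com/experiments/a"),  # Googlebot group applies
    ("Bingbot", "https://example.com/experiments/a"),    # falls back to the "*" group
    ("Bingbot", "https://example.com/admin/"),           # blocked by the "*" group
    ("Googlebot", "https://example.com/admin/"),         # "*" rules ignored for Googlebot
]
for agent, url in checks:
    status = "allowed" if parser.can_fetch(agent, url) else "blocked"
    print(f"{agent:10} {url:40} {status}")
```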

Regular checks of your robots.txt file help your SEO work.

Simple fixes to these problems can improve your site’s performance and make it more visible in search results.

A good robots.txt file helps search engines find your most important content.

It’s not just about blocking crawlers.

Robots.txt FAQ: Technical SEO Guide

Master the implementation and optimization of your robots.txt file for better crawl efficiency, indexing control, and search performance.

1. Is a robots.txt file mandatory for my website to be indexed by Google?

No, a robots.txt file is not strictly mandatory for indexing. If absent, search engine crawlers will typically attempt to crawl and index all accessible pages on your site. However, professional SEOs consider it an essential best practice in technical SEO.

Why Implement It Anyway? Without a robots.txt file, you forfeit critical control over crawl budget allocation. Crawl budget refers to the finite number of URLs search engine bots can and want to crawl on your site within a given period. By guiding bots away from low-value, duplicate, or sensitive content (like staging sites, admin folders, or internal search results), you optimize how this budget is spent.

Think of it not as a requirement for entry, but as a strategic tool for efficient site management and security. It helps prevent search engines from wasting resources on pages that shouldn’t be prioritized, ensuring your most important content gets discovered and indexed promptly.

2. Can I use robots.txt to block a page from appearing in Google Search results?

This is one of the most critical distinctions in technical SEO: The `robots.txt` file can request that crawlers do not crawl a specific URL, but it cannot prevent a page from being indexed.

Important Warning: If a page has inbound links from other sites or is listed in an XML sitemap, Google may still discover its URL and index it. In such cases, it might appear in search results without a description, because the crawler couldn’t access the content to generate a snippet.

To definitively block a page from the search index, you must use an indexing directive: `<meta name="robots" content="noindex">`

This meta tag must be placed in the HTML `<head>` section of the page. Because search engines can only read it when they are allowed to fetch the page, take care when combining it with robots.txt:

Best Practice: Do not `Disallow` a URL in `robots.txt` if you rely on its `noindex` tag (or an `X-Robots-Tag` HTTP header) to remove it from search results. Keep the page crawlable so the directive can be read, and reserve `Disallow` for URLs you simply don’t want crawled.
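To check which indexing directive a page actually sends, you can look in both places a noindex instruction can live: the `X-Robots-Tag` response header and the robots meta tag. The sketch below uses only Python’s standard library and a hypothetical `has_noindex` helper. It deliberately ignores crawler-specific variants, such as `<meta name="googlebot">` or user-agent prefixes inside the header, which a complete checker would also need to handle.

```python
from html.parser import HTMLParser

class RobotsMetaParser(HTMLParser):
    """Collect the content of every <meta name="robots"> tag on a page."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            self.directives.append((attrs.get("content") or "").lower())

def has_noindex(headers: dict, html: str) -> bool:
    """True if the X-Robots-Tag header or a robots meta tag says noindex."""
    # HTTP header names are case-insensitive, so normalise them first.
    header_value = {k.lower(): v for k, v in headers.items()}.get("x-robots-tag", "")
    if "noindex" in header_value.lower():
        return True
    meta = RobotsMetaParser()
    meta.feed(html)
    return any("noindex" in d for d in meta.directives)

page = '<html><head><meta name="robots" content="noindex, follow"></head><body>Thanks!</body></html>'
print(has_noindex({}, page))                                      # True (meta tag)
print(has_noindex({"X-Robots-Tag": "noindex"}, "<html></html>"))  # True (header)
print(has_noindex({}, "<html><head></head></html>"))              # False
```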

3. How do I find my robots.txt file, and what if I can’t find one?

Your robots.txt file must reside in the root directory of your primary domain (the top-level folder). You can easily view it by typing the following directly into your browser’s address bar:

https://yourdomain.com/robots.txt

If no page loads or you receive a 404 error, your site currently does not have a robots.txt file. This is common for new websites or simpler web projects.

Creating a Robots.txt File:

- Open any plain text editor (Notepad, TextEdit, VS Code, etc.)

- Add your directives (e.g., `User-agent: *` followed by `Allow` or `Disallow` rules)

- Save the file as UTF-8 plain text with the exact name `robots.txt`

- Upload it to the root directory of your website via FTP or your hosting platform’s file manager
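If you prefer to generate the file from a script instead of typing it by hand, the sketch below writes a UTF-8 `robots.txt` from a small dictionary of rules and reads it back with `urllib.robotparser` (Python 3.8+ for `site_maps()`) to confirm it parses. The rule set, the `build_robots_txt` helper, and the temporary output folder are hypothetical; you would still upload the result to your site’s root directory.

```python
import tempfile
from pathlib import Path
from urllib.robotparser import RobotFileParser

# Hypothetical rules: user agent -> list of (directive, path) pairs.
# Allow is listed before the broader Disallow so that first-match parsers
# (like urllib.robotparser) and longest-match parsers (like Google's) agree.
RULES = {
    "*": [("Allow", "/admin/help/"), ("Disallow", "/admin/")],
}
SITEMAP = "https://example.com/sitemap.xml"

def build_robots_txt(rules: dict, sitemap: str = "") -> str:
    """Render rule groups as robots.txt text, one blank line between groups."""
    groups = []
    for agent, directives in rules.items():
        lines = [f"User-agent: {agent}"]
        lines += [f"{directive}: {path}" for directive, path in directives]
        groups.append("\n".join(lines))
    text = "\n\n".join(groups) + "\n"
    if sitemap:
        text += f"\nSitemap: {sitemap}\n"
    return text

out_dir = Path(tempfile.mkdtemp())    # stand-in for your site's root folder
robots_file = out_dir / "robots.txt"  # the name must be exactly robots.txt
robots_file.write_text(build_robots_txt(RULES, SITEMAP), encoding="utf-8")

# Read the file back to confirm the rules parse as intended.
parser = RobotFileParser()
parser.parse(robots_file.read_text(encoding="utf-8").splitlines())
print(robots_file.read_text(encoding="utf-8"))
print(parser.can_fetch("*", "https://example.com/admin/settings"))  # False
```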

For CMS Users: Most Content Management Systems like WordPress have plugins (Yoast SEO, Rank Math) or built-in settings that can generate and manage this file automatically, often through the SEO settings panel.

4. What’s the difference between “Disallow” in robots.txt and a “noindex” meta tag?

These are two fundamentally different instructions that serve separate purposes in the SEO technical stack:

Disallow (in robots.txt): This is a crawl directive. It instructs compliant search engine crawlers not to request the content of a specified URL or path. The crawler saves resources by not fetching the page, which also conserves your server bandwidth. However, the page’s URL can still be discovered and potentially indexed.

Noindex (meta tag or HTTP header): This is an indexing directive. Placed in the HTML `<head>` section of a page (or sent via an HTTP header), it explicitly tells search engines not to include that page in their search index. Critically, the crawler must first be allowed to fetch the page to read this instruction.
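The sketch below makes that dependency explicit by combining the two checks for a single URL. It uses `urllib.robotparser` for the crawl side and takes the page’s noindex status as an input; the `index_outlook` helper, its status strings, and the paths are hypothetical simplifications, not labels any search engine actually uses.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Disallow: /thank-you/
"""

def index_outlook(parser: RobotFileParser, url: str, page_has_noindex: bool) -> str:
    """Summarise how crawl and index directives combine for one URL."""
    if not parser.can_fetch("*", url):
        # The page is never fetched, so a noindex tag on it is never seen;
        # the bare URL can still be indexed if other pages link to it.
        return "not crawled; noindex invisible; URL may still be indexed"
    if page_has_noindex:
        return "crawled; noindex read; kept out of the index"
    return "crawled; eligible for indexing"

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

print(index_outlook(parser, "https://example.com/thank-you/order", page_has_noindex=True))
print(index_outlook(parser, "https://example.com/confirm/order", page_has_noindex=True))
print(index_outlook(parser, "https://example.com/blog/post", page_has_noindex=False))
```

The first case is the trap: disallowing a page hides its own noindex instruction from the crawler.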

Practical Application Guide:

- Use `Disallow` for: URLs you don’t want crawled at all, such as infinite calendar pages, duplicate parameter URLs, and staging environments. Avoid blocking the CSS and JavaScript files your pages need, because search engines use them to render your content.

- Use `noindex` for: Pages you don’t want in search results but may need to be accessible for other reasons, such as thank-you/confirmation pages, internal search result pages, temporary campaign pages, and filtered product lists.

5. How often should I check or update my robots.txt file?

You should conduct a formal robots.txt audit at least quarterly as part of your routine technical SEO maintenance. Additionally, perform an immediate review after any significant website structural changes.

Key Triggers for an Immediate Review:

- Site Redesign or Migration: New site architectures often introduce new directories, parameters, or content types that may need regulation.

- Launching New Sections: Ensure new content areas (blogs, forums, membership portals) are correctly configured for crawling.

- SEO Strategy Shifts: Changes in content consolidation, canonicalization strategies, or international targeting.

- Security Updates: Adding new administrative or development areas that should remain private.
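When one of these triggers fires, a quick way to audit a change is to compare the old and new files against a list of URLs you care about. The sketch below flags any URL whose crawl status flips between versions. The `crawl_changes` helper, both rule sets, and the URL list are hypothetical, and the check inherits `urllib.robotparser`’s simple prefix matching rather than Google’s wildcard handling.

```python
from urllib.robotparser import RobotFileParser

OLD = """\
User-agent: *
Disallow: /staging/
"""

# After a redesign, "Disallow: /p" was added to block /p?id=... URLs,
# but as a prefix rule it also matches /products/ and /pricing.
NEW = """\
User-agent: *
Disallow: /staging/
Disallow: /p
"""

IMPORTANT_URLS = [
    "https://example.com/",
    "https://example.com/products/widget",
    "https://example.com/pricing",
    "https://example.com/blog/launch",
]

def crawl_changes(old_txt: str, new_txt: str, urls: list, agent: str = "*") -> dict:
    """Map each URL whose crawlability differs to an (old, new) pair."""
    old, new = RobotFileParser(), RobotFileParser()
    old.parse(old_txt.splitlines())
    new.parse(new_txt.splitlines())
    return {
        url: (old.can_fetch(agent, url), new.can_fetch(agent, url))
        for url in urls
        if old.can_fetch(agent, url) != new.can_fetch(agent, url)
    }

for url, (before, after) in crawl_changes(OLD, NEW, IMPORTANT_URLS).items():
    print(f"{url}: {'allowed' if before else 'blocked'} -> {'allowed' if after else 'blocked'}")
```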

Essential Monitoring Tools:

Utilize Google Search Console regularly:

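- Check the robots.txt report under “Settings” (the successor to the retired “robots.txt Tester”) to confirm Google can fetch and parse your file and to review any warnings or errors.

- Monitor the “Page indexing” report (formerly “Coverage”) for unexpected “Blocked by robots.txt” entries that might indicate you’re accidentally hiding important content.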

- Use the “URL Inspection” tool to test how Googlebot sees specific pages in relation to your robots.txt rules.

Proactive management prevents outdated rules from accidentally blocking important content, ensuring your robots.txt file remains a strategic asset rather than a technical liability to your search performance.

Summary

Mastering your robots.txt file is a non-negotiable component of a robust technical SEO strategy. It transcends basic crawl control, evolving into a strategic asset for site integrity and performance.

To capitalize on its full potential, remember:

Precision is Paramount: Accurate syntax and clear directives prevent costly errors that can hide critical content from search engines.

Crawl Budget Optimization: By disallowing crawl-wasteful areas like internal search results or admin panels, you channel bot attention to your cornerstone content, improving indexing speed and quality.

Security & Content Protection: Use robots.txt to keep compliant bots away from private directories, but treat it as a hint rather than a defense: the file is public, so always protect sensitive content with proper access controls.

Dynamic Management: Treat your robots.txt as a living document. Regular audits and updates in line with site changes and evolving SEO goals are essential for maintaining its effectiveness.

In essence, a strategically implemented robots.txt file acts as a silent conductor for search engine crawlers, orchestrating a more efficient, secure, and focused indexing process. By adopting the best practices outlined, you assert greater control over your site’s search engine footprint, laying a solid foundation for enhanced visibility, improved user experience, and sustained organic growth. Proactively manage this powerful tool to ensure your most valuable pages receive the spotlight they deserve in search results.